The short shelf life of my previous experiment, a result of Twitter restricting searches to roughly the past week, had me thinking about a more permanent way to archive tweets and their geolocation information. On the same day I wrapped up SF Tweets the World Cup 2010, Andrew at SimpleGeo wrote about how to map Foursquare checkins to a SimpleGeo layer. This way the checkins are all visible on one layer and the user can perform spatial queries on them.

Andrew’s example Python script looked simple enough that I used it as a base to do essentially what I’d done with the World Cup tweets, except that instead of plotting tweets on a Google map I would insert them into a SimpleGeo layer. This time, rather than searching on a keyword and collecting whatever came back, the goal was to collect tweets from a single Twitter user: in this case, me.

I could have accessed the Twitter API directly as I did for the World Cup tweets, but the availability of some simple and excellent Twitter clients/libraries for Python made them too convenient to pass up. I quickly found a few and settled on Tweepy, by joshthecoder. It’s described as a Python library with “complete coverage” of the Twitter API. And just as cool, it has reasonably detailed documentation. Sold!

The first step was to get Tweepy returning tweets. For a single user’s timeline Tweepy provides the method tweepy.api.user_timeline, one of several timeline methods. By default this returns the last 20 tweets as objects available for manipulation, including the fields of interest here such as coordinates, if available, the tweet ID, date and time, and so on. It’s quite easy to get Tweepy talking with Twitter and in minutes I had my latest 20 tweets on the screen. Since I’m no Python guru, it took me a bit longer to get familiar with the objects returned to me and their available attributes and methods. Someone even slightly Python-adept could have breezed through this in just a few clock cycles, but it’s part of the learning process for me.

Assigning latitude and longitude is pretty easy as long as the tweet has the associated tweet.geo data. As I noted in the World Cup blog post, this is often not the case. This was an important reason to plot my own tweets: I have control over the geotagging of each tweet, and don’t have to depend on others to do it. If I were to grab tweets from the search API or the public timeline, it’s likely a small percentage would have latitude and longitude information.

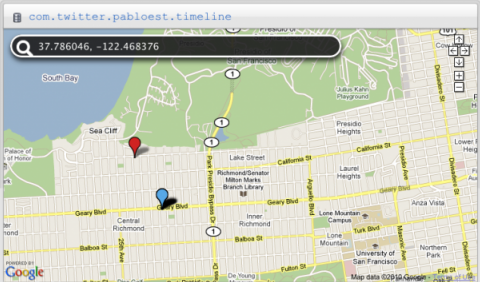

But wait, what about Twitter places? Yes, users can attach place-based geographic information to their tweets. This allows the user to reveal the general location from which the tweet is sent without revealing the exact coordinates. Naturally, a bounding box describes this area on a map, and Twitter associates a unique URL with each place. In my case, many of my tweets are sent from the Richmond in San Francisco. The XML attached to my tweets sent from the Richmond and identified as such is this:

<twitter:place xmlns:georss="http://www.georss.org/georss"> <twitter:id>64be9bb264eb76c1</twitter:id> <twitter:name>Central Richmond</twitter:name> <twitter:full_name>Central Richmond, San Francisco</twitter:full_name> <twitter:place_type>neighborhood</twitter:place_type> <twitter:url>http://api.twitter.com/1/geo/id/64be9bb264eb76c1.json</twitter:url> <twitter:attributes> <twitter:bounding_box> <georss:polygon>37.77212997 -122.49240012 37.77212997 -122.47178184 37.78442901 -122.47178184 37.78442901 -122.49240012</georss:polygon> </twitter:bounding_box> <twitter:country code="US">The United States of America</twitter:country> </twitter:attributes></twitter:place>

A bounding box like this is not difficult to deal with, but there should be some consideration on how the information is presented when it is in the same context as markers on a map with lat and lon coordinates. But that’s a digression…
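To make the bounding box a little more concrete, here is a minimal sketch of turning the `georss:polygon` text into corner points and a rough center that could stand in for a marker. The function names are made up for illustration, and using the midpoint of the box as a marker position is just one possible choice, not something Twitter or SimpleGeo prescribes.

```python
def parse_polygon(text):
    """Split a georss:polygon string into a list of (lat, lon) tuples."""
    values = [float(v) for v in text.split()]
    if len(values) % 2 != 0:
        raise ValueError("expected an even number of coordinates")
    # GeoRSS lists each point as "lat lon", so pair up alternating values.
    return list(zip(values[0::2], values[1::2]))


def bounding_box_center(points):
    """Return the midpoint of the box that encloses all points."""
    lats = [lat for lat, _ in points]
    lons = [lon for _, lon in points]
    return ((min(lats) + max(lats)) / 2, (min(lons) + max(lons)) / 2)


# The polygon from the Central Richmond place shown above.
RICHMOND = ("37.77212997 -122.49240012 37.77212997 -122.47178184 "
            "37.78442901 -122.47178184 37.78442901 -122.49240012")

corners = parse_polygon(RICHMOND)
center = bounding_box_center(corners)
```

A center point like this loses the "somewhere in this neighborhood" meaning of the place, so it would probably be worth styling such markers differently from tweets that carry exact coordinates.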

Once the proper modules are imported, the two key components to call are the SimpleGeo Client(MY_OAUTH_KEY, MY_OAUTH_SECRET) and the Tweepy tweepy.api.user_timeline(MY_TWITTER_USERNAME). All that basically remains is to throw objects from one universe into the other. Tweepy returns status objects and the SimpleGeo client adds records to a database.

My own tweets don’t always have associated coordinates, so even though I can choose to tag or not tag a tweet with coordinates, it’s smart to check and ensure a tweet has the necessary properties before trying to add it to a layer. If a tweet has coordinates, its status object will have an array in tweet.geo['coordinates'] of the form [lat,lon]. That’s simple to verify and require before calling the function to insert the object into the layer. Some other properties are useful: the tweet’s URL, the place (this could be useful later even if the lat,lon coordinates are included), the text of the tweet itself, and the time-stamp.
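The check described above can be sketched roughly as follows. This is not the code from the gist: the helper names and the shape of the properties dictionary are hypothetical, and `SimpleNamespace` objects stand in for Tweepy status objects so the example runs without touching Twitter. The only things assumed about a status are the attributes mentioned in this post (`geo`, `text`, an ID, a time-stamp) plus `user.screen_name` for building the tweet's URL.

```python
from datetime import datetime
from types import SimpleNamespace


def lat_lon(status):
    """Return (lat, lon) if the status carries coordinates, else None."""
    geo = getattr(status, "geo", None)
    if not geo:
        return None
    coords = geo.get("coordinates")
    if not coords or len(coords) != 2:
        return None
    return float(coords[0]), float(coords[1])


def to_properties(status):
    """Collect the fields worth storing; None means 'skip this tweet'."""
    point = lat_lon(status)
    if point is None:
        return None
    return {
        "id": str(status.id),
        "lat": point[0],
        "lon": point[1],
        "text": status.text,
        "created": status.created_at,
        "url": "http://twitter.com/%s/status/%s"
               % (status.user.screen_name, status.id),
    }


# Stand-in statuses: one geotagged, one not (values are invented).
user = SimpleNamespace(screen_name="example_user")
tagged = SimpleNamespace(id=1, text="hello from the Richmond", user=user,
                         created_at=datetime(2010, 7, 13, 19, 30),
                         geo={"type": "Point",
                              "coordinates": [37.778, -122.482]})
untagged = SimpleNamespace(id=2, text="no location here", user=user,
                           created_at=datetime(2010, 7, 13, 20, 0),
                           geo=None)

records = [p for p in map(to_properties, [tagged, untagged]) if p]
```

Only the geotagged status survives the filter, so whatever inserts records into the layer never sees a tweet without a latitude and longitude.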

I ran into one hiccup with the time-stamp with regard to time zones and offsets. Each Tweepy status returns a time-stamp in GMT form. When I first added these to the SimpleGeo layer they had an offset of +7 hours (the magnitude of the difference between PDT and GMT zones). To account for this I manually subtracted the time zone offset from the tweet’s time-stamp, and that fixed it. I peeked at the Tweepy code to see if I could do it more elegantly, but I’m not sure I understand how it handles locale as there is some comment about backward-compatibility with Python 2.4.
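For anyone hitting the same time zone snag, here is one possible alternative to subtracting a fixed offset by hand. It is a sketch under two assumptions that this post does not confirm: that the time-stamp arrives as a naive `datetime` in GMT/UTC, and that the destination wants seconds since the epoch. The function names are invented for the example, and the fixed -7 hour zone only covers PDT, not standard time.

```python
import calendar
from datetime import datetime, timedelta, timezone

# Fixed offset for Pacific Daylight Time; does not handle PST.
PDT = timezone(timedelta(hours=-7), "PDT")


def utc_naive_to_epoch(dt):
    """Interpret a naive datetime as UTC and return epoch seconds.

    calendar.timegm treats the time tuple as UTC, unlike time.mktime,
    which would read it as local time and shift the result.
    """
    return calendar.timegm(dt.timetuple())


def utc_naive_to_pdt(dt):
    """Attach UTC to a naive datetime, then convert it to PDT."""
    return dt.replace(tzinfo=timezone.utc).astimezone(PDT)


stamp = datetime(2010, 7, 13, 19, 30, 0)   # invented example time, in UTC
epoch = utc_naive_to_epoch(stamp)
local = utc_naive_to_pdt(stamp)
```

Keeping the stored value in UTC and converting only for display avoids having to revisit the offset when daylight saving time ends.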

It’s also quite simple to delete records, and I did this a few times as an experiment and to check I was manipulating the layer’s objects correctly. Passing the layer and object ID to SimpleGeo’s client.delete_record deletes the object.

I didn’t change anything from Andrew’s Foursquare example to add the objects into the record and it worked! Next steps might be to add duplicate record checking, pagination, and spatial queries into the SimpleGeo layer. Thanks to Andrew for his help on getting my example to work – he gave me some pointers and was readily available to help me out.

The .py file is available here: http://gist.github.com/474741